- AI could require as much electricity as Sweden by 2027
- By 2027, AI servers could use between 85 and 134 terawatt hours annually
- AI server chip supply chain and manufacturing issues should be solved within a few years

Artificial intelligence (AI) could soon require as much electricity as countries like Argentina, Sweden or the Netherlands, the New York Times reported.

This might come as a shock to the general populace but won’t surprise the AI and cloud-savvy readers of Silverlinings. We’ve been covering the topic for a couple of months now.

The Gray Lady got the figures for its piece from a peer-reviewed analysis by PhD candidate Alex de Vries. The NYT reports that, even with conservative estimates, AI servers could use between 85 and 134 terawatt hours (TWh) annually by 2027. In other words, within a few years AI could use as much electricity as a European country such as Sweden or the Netherlands.

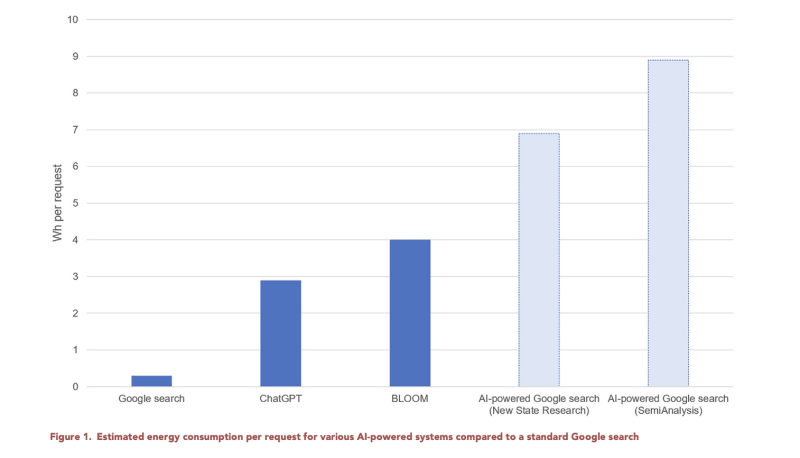

Check out the Wh per request stats that De Vries projects in the chart below:

Nvidia and some other early AI chip players may be starting strong commercially, but they are starting slow on the electricity consumption scale. De Vries noted in his report that the AI server chips Nvidia, currently the largest AI server chip supplier, ships in 2023 will use an “almost negligible” amount of power “compared to the historical estimated annual electricity consumption of data centers, which was 205 TWh.”

In fact, AI could quickly replace cryptocurrency mining as the environment-destroyer in more ways than one. Wrote De Vries in his report: "It is already the case that former cryptocurrency miners using such GPUs have started to repurpose their computing power for AI-related tasks."

He continued: "Many of these GPUs were left redundant in September of 2022, when Ethereum, the second-largest cryptocurrency, replaced its energy-intensive mining algorithm with a more sustainable alternative. The change was estimated to have reduced Ethereum’s total power demand by, at most, 9.21 GW. This equates to 80.7 TWh of annual electricity consumption. It has been suggested that 20% of the GPUs formerly used by Ethereum miners could be repurposed for use in AI, in a trend referred to as 'mining 2.0.'"
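The jump from 9.21 GW to 80.7 TWh is straightforward arithmetic: a constant power draw multiplied by the hours in a year gives annual energy. Here's a minimal Python check, assuming a 365-day (8,760-hour) year and that the full 9.21 GW reduction holds around the clock:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def annual_twh(power_gw: float) -> float:
    """Convert a constant power draw in gigawatts to terawatt hours per year."""
    gwh = power_gw * HOURS_PER_YEAR  # GW x hours = GWh
    return gwh / 1_000               # 1 TWh = 1,000 GWh


ethereum_reduction_gw = 9.21  # upper-bound reduction cited by De Vries
print(f"{annual_twh(ethereum_reduction_gw):.1f} TWh")
```

Running it prints 80.7 TWh, matching the figure in the quote.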

So, Silverliners, it seems GPUs left idle when Ethereum abandoned energy-intensive mining will find a second life in AI.

To quote Homer Simpson, "D'oh!"

The report notes that Nvidia and others will be held back over the next few years by major semiconductor manufacturer TSMC, as it sorts out its supply chain and manufacturing issues.

By 2027, however, the report said that Nvidia alone could be shipping “1.5 million of its AI server units.” That would drastically increase the power requirements of AI server chips.

“At this stage, these servers could represent a significant contribution to worldwide data center electricity consumption,” the report said.
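To see how a server count turns into the 85 to 134 TWh range, multiply the number of servers by the power each one draws and by the hours in a year. The sketch below assumes each server draws either 6.5 kW or 10.2 kW, roughly the published maximum power of Nvidia's DGX A100 and DGX H100 systems. Those per-server figures are assumptions not stated in this article, as is the premise that every server runs at full capacity all year. It also compares the result with the 205 TWh historical data center figure cited above:

```python
HOURS_PER_YEAR = 365 * 24        # assumes servers run at full capacity all year
SERVERS_2027 = 1_500_000         # Nvidia's projected AI server units, per the report
DATA_CENTER_TWH = 205            # historical data center estimate cited in the report

# Assumed per-server power draw in kW (not from the article): roughly the
# published maximum power of Nvidia's DGX A100 and DGX H100 systems.
ASSUMED_SERVER_KW = [("DGX A100", 6.5), ("DGX H100", 10.2)]


def fleet_twh(servers: int, kw_per_server: float) -> float:
    """Annual energy (TWh) for a server fleet drawing constant power all year."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR  # kW x hours = kWh
    return kwh / 1e9                                # 1 TWh = 1e9 kWh


for name, kw in ASSUMED_SERVER_KW:
    twh = fleet_twh(SERVERS_2027, kw)
    share = twh / DATA_CENTER_TWH
    print(f"{name}: {twh:.1f} TWh/yr, about {share:.0%} of the 205 TWh figure")
```

Under those assumptions the script prints about 85.4 and 134.0 TWh, in line with the range the Times reported, or roughly 42% to 65% of the 205 TWh historical estimate. Actual utilization and cooling overhead would shift the real-world number in either direction.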

And, of course, Nvidia isn’t the only chip designer planning to put their stamp on the AI market.

As Silverlinings has previously reported, the chips aren’t the only part of the AI equation, even if they make up a large part of the power equation.

AI models themselves, particularly unrefined or untrained models, use an awful lot of power, since deep learning training requires massive server farms. As enterprises start to train their own AI models, those models could draw on enormous amounts of data for continual training. Hussein Hallak, co-founder of AI art firm Momentable, told Silverlinings in September that enterprise AI use could be the biggest energy consumption factor in the future.

We’ll be sure to keep you all updated on the AI energy issue as the field develops further.

Want to discuss AI workloads, automation and data center physical infrastructure challenges with us? Meet us in Sonoma, Calif., from Dec. 6-7 for our Cloud Executive Summit. You won't be sorry.