-

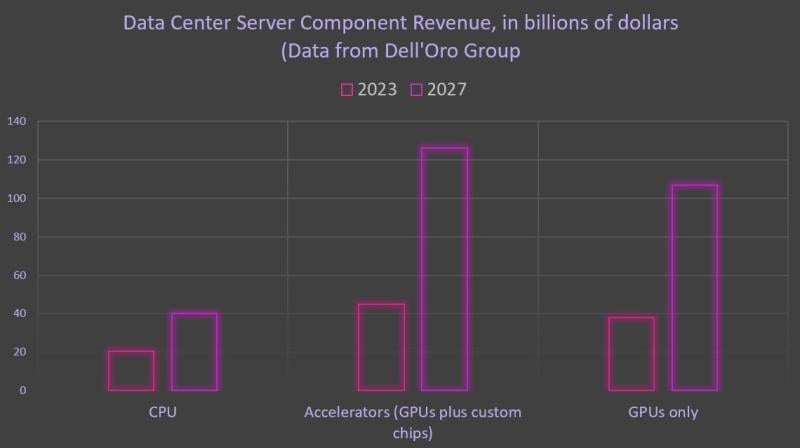

GPU revenue has already surpassed CPU revenue, according to Dell’Oro Group data

-

Accelerator revenue is set to nearly triple by 2027

-

AMD is coming for Nvidia, but Dell’Oro’s Baron Fung said hyperscalers are actually a bigger threat

You knew the artificial intelligence (AI) boom was big, but did you know it was big enough to push GPU revenue past CPU revenue for the first time ever in 2023? The trend of GPU revenue outpacing CPU sales is only expected to continue, Dell’Oro Group Senior Research Director Baron Fung told Silverlinings.

Data center accelerator revenue, which includes GPUs as well as custom chips built by hyperscalers, jumped 224 percent in 2023 to roughly $45 billion, Fung said. By 2027, that number is expected to nearly triple, he added.
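For a rough sense of what those figures imply, here is a back-of-the-envelope sketch in Python. It uses only the two numbers Fung cited (a 224 percent jump to roughly $45 billion). Treating "nearly triple" as exactly 3x is our assumption, so the 2027 figure and the growth rate are upper bounds rather than Dell’Oro forecasts. The function names are ours, purely for illustration.

```python
def prior_year_revenue(current: float, pct_increase: float) -> float:
    """Back out last year's revenue from this year's revenue and the % jump."""
    return current / (1 + pct_increase / 100)


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


# Figures from the article (billions of US dollars).
revenue_2023 = 45.0       # "roughly $45 billion"
growth_2023_pct = 224.0   # "jumped 224 percent in 2023"

# Assumption: "nearly triple" is treated as exactly 3x, so this is a ceiling.
multiple_by_2027 = 3.0

revenue_2022 = prior_year_revenue(revenue_2023, growth_2023_pct)
revenue_2027 = revenue_2023 * multiple_by_2027
implied_cagr = cagr(revenue_2023, revenue_2027, years=2027 - 2023)

print(f"Implied 2022 revenue: ${revenue_2022:.1f}B")
print(f"2027 revenue at 3x:   ${revenue_2027:.0f}B")
print(f"Implied annual growth, 2023 to 2027: {implied_cagr:.1%}")
```

Under those assumptions, the 2022 base works out to about $13.9 billion, and tripling by 2027 would put revenue at no more than about $135 billion, or growth of a little under 32 percent a year. That is a steep climb, but far slower than the pace set in 2023.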


(For what it’s worth, AMD’s CEO has said she expects the AI chip market to be worth $400 billion by 2027. New Street Research in January calculated that this implies an installed base of 10 million AI servers and $800 billion in total spending for the servers plus the buildings, networking, power and cooling required to run them. That all would require growth in several areas to “materially beat current expectations,” they said. But we digress.)

CPUs remain the jack of all trades. But Fung noted that as applications (including AI) have become bigger and grown to require more power, cloud and chip companies have been forced to turn to accelerators to perform compute tasks more efficiently.

Anyone who has looked at the news lately knows Nvidia basically owns the GPU market with more than 80% market share. But Fung noted that if AMD can execute on the strong guidance it recently put out, it could gain market share and end up at around 10%.

Fung added, though, that the real threat to Nvidia’s dominance isn’t AMD or even Intel but the hyperscalers themselves. He explained this is because hyperscalers are increasingly using purpose-built chips designed in-house.

“Their own AI chip deployments are probably a bigger competitor to Nvidia than AMD,” he told us. “We’re seeing quite a bit of deployments of their own chips.”

For instance, he noted Google uses its in-house TPU for internal workloads and also rents it out to a handful of outside customers. Amazon Web Services, meanwhile, is making its Trainium, Inferentia and Graviton processors available to public cloud users. And Microsoft is planning to deploy AI clusters using its own chips this year.

Why? Well, Fung said, the supply chain chaos of the pandemic still looms large in the rearview, and hyperscalers don’t want to deal with the risk associated with having only one supplier (coughNvidiacough).

Competitive landscape aside, Fung said there is one big question floating around in the ether: How long can hyperscalers sustain their current, and still growing, levels of GPU investment?

“This is one thing people keep asking, whether or not AI investments are sustainable,” he said.

The key, Fung said, is maximizing return on investment through moves like vertical integration and extending the lifespan of general purpose servers to free up money for AI investments.

Of course, AMD recently had something to say about this, which you can read about here.