If there’s one thing that everyone in the wireless industry agrees on, it’s that edge computing is a thing.

“There’s no doubt in my mind that this is a thing,” said Igal Elbaz, AT&T’s SVP of wireless network architecture and design. “Now it’s a question of timing, of deployment, of getting people engaged, and shaping the use cases and the development and optimization. But it’s a thing.”

Elbaz isn’t alone.

“The edge is here and it’s real,” wrote the analysts at Wall Street research firm Credit Suisse in a recent report. “Edge data centers are already in production with signed customers, including Akamai, Oath (Yahoo and AOL, now owned by Verizon), Fastly, Cloudflare and Limelight, to name a few, all targeted to be deployed at the edge.”

“The mobile edge compute is a clear part of our strategy,” said Verizon’s Greg Dial, executive director of 4G and 5G planning. Dial explained that in recent years Verizon has transitioned its network architecture to a centralized RAN design, in which one central location handles the computing and management chores for a group of nearby antennas. That centralized design, Dial said, creates a good foundation for an edge computing service.

So what’s driving all this interest? It’s relatively simple, really: People are using increasingly demanding data services like Siri, Alexa and virtual reality. An edge computing design, in which queries are processed in a data center geographically closer to the end user, can speed up those communications by lowering network latency. (Latency is the time it takes data to travel across a network; it’s part of the pause between saying “hey Alexa” and hearing the speaker respond with “yes?”)

This would represent a major change from most of today’s computing designs, where queries are sent hundreds or thousands of miles away to be processed in a data center.

“Every generation of the iPhone uses 2x more data,” said Intel’s Asha Keddy, GM of the company’s Next Generation and Standards group. Keddy explained that all of that data, coupled with technologies like artificial intelligence that only really work when real-time interactions are possible, is creating a need for faster and more powerful computing. “If I have something at the edge, then my phone can connect and this information is here and it's not going anywhere, and it's more real time.”

“According to Gartner, there will be an estimated 25.1 billion devices connected to the internet by 2021, up from 3.6 billion in 2016,” wrote the analysts at Wall Street research firm Barclays. “This will require resources to be located closer to the edge of the network so they can be accessed with minimum latency and jitter while securing maximum bandwidth speeds. For example, in the future, self-driving cars will not only have to communicate with other cars but also the infrastructure around them. This will present an opportunity for edge data center providers given the needs to process data flow from vehicle-to-vehicle and vehicle-to-infrastructure.”

Demoing edge computing

In fact, in recent years there have been some fairly compelling edge computing demonstrations that show how this technology might make things go faster. Here are a few:

-

As noted by Data Center Frontier, LinkedIn tested an edge computing setup and in five facilities—two in the United States, two in India and one in China—that each housed 8 to 16 servers and one switch, and supported 50 Gbps peering and transit. “The areas where LinkedIn deployed Edge Connect units saw a 15 to 50% improvement in the user experience,” the publication reported.

-

Nokia and Notre Dame's Wireless Institute last year tested a mobile edge computing deployment at the college’s football stadium and streamed four videos in real time with a less than 500-millisecond delay.

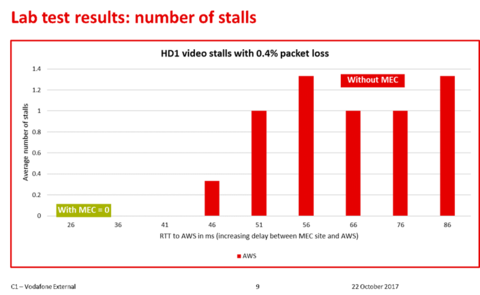

- Vodafone and Saguna Networks showed that a test of Saguna’s edge computing products resulted in no stalls of streamed video, compared with multiple stalls in a standard wireless network design:

So edge computing could seriously speed up things like video streaming and data access, and it might be essential to services like real-time, cloud-based virtual reality gaming or high-speed vehicle-to-vehicle communications. As AT&T’s Elbaz explained, streaming virtual reality video requires network latency below 30 ms; otherwise users can get motion sick because their movements won’t match up with the visuals they’re seeing. In a traditional cloud architecture, average latency can be 80-100 ms, “which is not fast enough for some of the immersive experiences that we are all talking about,” Elbaz said.

To solve this problem, edge computing would bring the computing element geographically closer to the end user: closer to the “edge” of the network, so to speak. For example, if you live in Denver, a cloud-based VR service would work a lot better if it were streamed from a data center physically located in Denver rather than from somewhere like Nevada or Dallas.
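To make the distance argument concrete, here is a rough back-of-the-envelope sketch. It assumes light in optical fiber travels at roughly 200,000 km per second (about two-thirds of its speed in a vacuum) and uses hypothetical route lengths. Real fiber routes are longer than straight-line distances, and radio access, queuing, routing and server processing all add delay, so these figures are a floor rather than a forecast. The 30 ms budget is the VR figure Elbaz cited.

```python
# Back-of-the-envelope estimate of how much of a VR latency budget
# is consumed by fiber propagation delay alone.
# Assumptions (not from the article): light travels through fiber at
# roughly 200,000 km/s, and the route lengths below are hypothetical.

FIBER_SPEED_KM_PER_MS = 200.0  # ~200,000 km/s expressed per millisecond
VR_BUDGET_MS = 30.0            # latency budget cited by AT&T's Elbaz


def round_trip_propagation_ms(route_km: float) -> float:
    """Time for a request to reach the server and the reply to return,
    ignoring every source of delay other than signal travel time."""
    return 2 * route_km / FIBER_SPEED_KM_PER_MS


def remaining_budget_ms(route_km: float, budget_ms: float = VR_BUDGET_MS) -> float:
    """Budget left for radio access, queuing, rendering, etc. after propagation."""
    return budget_ms - round_trip_propagation_ms(route_km)


# Hypothetical route lengths: a nearby edge site vs. a distant data center.
routes = {"local edge site (50 km)": 50, "distant data center (1,500 km)": 1500}

for name, km in routes.items():
    rtt = round_trip_propagation_ms(km)
    left = remaining_budget_ms(km)
    print(f"{name}: {rtt:.1f} ms round trip, {left:.1f} ms of budget left")
```

Under these assumptions, the distant site spends half of the 30 ms budget on travel time before any processing happens, while the nearby site leaves almost all of it for everything else.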

And that’s exactly what a number of startups and other players are planning to do: build mini data centers in locations around the country (like at the base of a cell tower) in order to process data closer to where users are making their requests. For example, startup Packet just raised $25 million in a Series B round of funding in part to help it build 50 new edge computing sites. Similarly, startup Vapor IO plans to build 100 “micro data centers” for edge computing in locations around the country by the end of next year. And another startup, EdgeMicro, announced plans to deploy micro data centers in 30 cities around the U.S. for an unnamed cellular partner.

Deployment details and challenges

Of course, there are some seriously complex problems that the industry will need to address before these edge computing locations can be put to work in any major way.

“We found continued evidence that the edge is still being defined, with application, latency and various use cases, all offering a variety of reasons for why the edge matters and will continue to increasingly matter over time,” the Credit Suisse analysts wrote.

“There's a lot of questions about, what do you expose and how do you expose this?” explained Elbaz. “One of the many reasons why public cloud [like Amazon Web Services or Microsoft Azure] has been so successful is that they made it very easy for developers to go and consume their infrastructure. So how do you build the same experience [for edge computing], and is it exactly the same experience? Or should we build something else? How do you expose latency? So, these are the kinds of interesting questions that the industry is looking at.”

AT&T, for its part, last year built an edge computing test center to answer those questions. The company said it worked with VR company GridRaster to see exactly how edge computing might improve user experiences, and it found that questions remain: “Our experimentation uncovered additional nuances,” AT&T’s Alisha Seam wrote in a blog post earlier this month about the carrier’s edge computing tests.

“We believe that network optimization is critical to enable mobile, cloud-based immersive media. But that’s not enough. First, we believe companies in this ecosystem need to streamline functions throughout the entire capture and rendering pipeline and devise new techniques to distribute functions between the cloud and mobile devices. Second, we discovered that the most notable benefits of edge computing come from delay predictability, rather than the amount of delay itself. We therefore believe that cloud-based immersive media applications will likely benefit from network functions and applications working more synergistically in real-time.”
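AT&T’s point about predictability can be illustrated with a small sketch built on two made-up latency traces (hypothetical numbers, not AT&T’s measurements). Both traces average 20 ms, but one swings between 5 ms and 35 ms. The sketch uses population standard deviation as a simple stand-in for jitter and checks each sample against the 30 ms VR budget Elbaz cited.

```python
import statistics

VR_BUDGET_MS = 30.0  # latency budget cited by AT&T's Elbaz

# Hypothetical per-frame latency samples in milliseconds (illustrative only).
steady = [20, 20, 20, 20, 20, 20, 20, 20]
erratic = [5, 35, 5, 35, 5, 35, 5, 35]


def summarize(samples, budget_ms=VR_BUDGET_MS):
    """Return mean latency, jitter (population standard deviation as a simple
    proxy) and the number of samples that exceed the latency budget."""
    mean = statistics.mean(samples)
    jitter = statistics.pstdev(samples)
    late = sum(1 for s in samples if s > budget_ms)
    return mean, jitter, late


for name, trace in (("steady", steady), ("erratic", erratic)):
    mean, jitter, late = summarize(trace)
    print(f"{name}: mean {mean:.1f} ms, jitter {jitter:.1f} ms, "
          f"{late} of {len(trace)} samples over budget")
```

Even though the two traces have the same average delay, only the erratic one misses the budget, and it does so on half of its samples.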

Elbaz said that AT&T is developing its Akraino platform in part to create that needed socket for developers to plug into. After all, if you’re Epic Games and you want to use edge computing for a virtual reality version of your popular Fortnite video game, how would you go about working with AT&T and others to do so? Akraino might serve as that socket Epic Games could plug into.

But AT&T’s Akraino isn’t the only option out there in terms of edge computing interfaces and platforms. For example, Deutsche Telekom and Aricent just today announced the creation of their “Open Source, low latency Edge compute platform.” Open19, supported by Vapor IO and others, could be another such platform.

If such issues get ironed out, the edge computing market could reach $4.4 billion by 2023, according to research firm Mobile Experts. And Mobile Experts analyst Joe Madden noted that the edge computing trend ties nicely into other movements in the telecom space, such as deployments of virtualized systems, distributed network architectures and 5G.

“The edge is the convergence of a lot of these capabilities,” agreed Elbaz.

But a full-blown edge computing opportunity likely won’t show up in the wireless industry until there’s some consensus on how to go about deploying this technology so that players like Epic Games can actually use it—and make money from it. – Mike | @mikeddano

Editor's Corners are opinion columns written by a member of the Fierce editorial team. They are edited for balance and accuracy.