- Amdocs is working with Nvidia to train telco data for GenAI
- It's using data that it has accumulated over decades
- The company’s ‘Amaizing’ GenAI platform can drastically cut down on customer call times

Amdocs is working with Nvidia to bring generative AI (GenAI) to the OSS/BSS services it provides for telcos. Amdocs’ GenAI platform is called "Amaiz," and the company says it is truly amazing how much time it can shave off some customer service calls.

For its part, Nvidia has become famous for its graphics processing unit (GPU) chips that support the top GenAI foundation models. But you may not know that Nvidia also hosts a “model garden.”

Anthony Goonetilleke, group president of technology and head of strategy at Amdocs, said Nvidia is building a software ecosystem above its hardware. Nvidia’s DGX cloud provides an environment for companies to work with a variety of large language models (LLMs).

Amdocs already works with Microsoft’s Azure OpenAI Service. But Amdocs is also using Nvidia’s model garden to access various LLMs, especially open-source LLMs such as Mistral and Llama.

“We were the first to try out an interesting concept called LLM jury,” said Goonetilleke. An LLM jury allows Amdocs to train one LLM on its data and then use a different LLM to gauge the quality of the results.
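To make the idea concrete, here is a minimal Python sketch of a jury-style evaluation. It is not Amdocs' or Nvidia's implementation. The names `jury_score`, `toy_generator`, `keyword_juror` and `brevity_juror` are hypothetical, and the "models" are plain functions standing in for real LLM calls so the example runs on its own. The article describes a single judging model; the sketch accepts one or more.

```python
from statistics import mean
from typing import Callable, List

# Hypothetical stand-ins: a generator maps a prompt to an answer, and
# each juror maps (prompt, answer) to a quality score between 0 and 1.
Generator = Callable[[str], str]
Juror = Callable[[str, str], float]


def jury_score(prompt: str, generator: Generator, jurors: List[Juror]) -> float:
    """Produce an answer with one model, then average the scores that
    the separate juror models assign to it."""
    answer = generator(prompt)
    return mean(juror(prompt, answer) for juror in jurors)


# Toy functions in place of real LLMs (assumptions for illustration only).
def toy_generator(prompt: str) -> str:
    return "Your bill rose because of a roaming charge."


def keyword_juror(prompt: str, answer: str) -> float:
    # Rewards answers that actually mention the bill.
    return 1.0 if "bill" in answer.lower() else 0.0


def brevity_juror(prompt: str, answer: str) -> float:
    # Rewards short answers; longer ones get partial credit.
    return 1.0 if len(answer.split()) <= 20 else 0.5


score = jury_score(
    "Why did my bill go up?", toy_generator, [keyword_juror, brevity_juror]
)
```

In a real setup each juror would be a call to a different LLM than the one that produced the answer, which is the separation Goonetilleke describes.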

He said Amdocs has also been working with Nvidia to reduce the number of tokens needed for a query. He explained that tokens are the unit by which a GenAI platform charges for its services. Prompts entered into the platform are billed in tokens according to their length, and the outputs the platform returns are billed the same way.

“So it’s very important to optimize the number of tokens that go in because once you scale this, it costs a lot of money,” said Goonetilleke.
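A rough Python sketch shows why trimming prompts matters at scale. Everything numeric here is an assumption for illustration: the four-characters-per-token ratio is only a common rule of thumb that varies by tokenizer, and the per-1,000-token prices are made up, not any vendor's rates. The function names are hypothetical.

```python
import math

# Assumption: roughly 4 characters per token; real tokenizers vary.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text from its length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def query_cost(prompt: str, output: str, price_in: float, price_out: float) -> float:
    """Estimated cost of one query, given hypothetical prices per
    1,000 input tokens and per 1,000 output tokens."""
    input_tokens = estimate_tokens(prompt)
    output_tokens = estimate_tokens(output)
    return (input_tokens * price_in + output_tokens * price_out) / 1000


# Same answer, but one prompt carries four times as much context.
long_prompt = "x" * 4000
short_prompt = "x" * 1000
answer = "y" * 400

cost_long = query_cost(long_prompt, answer, price_in=0.5, price_out=1.5)
cost_short = query_cost(short_prompt, answer, price_in=0.5, price_out=1.5)
```

With these made-up prices the shorter prompt costs well under half as much per query, a gap that compounds across millions of customer interactions.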

'Amaizing' customer service

Amdocs’ collaboration with Nvidia has focused on using GenAI to transform customer experiences and improve operational efficiencies.

In customer service, Amdocs is training GenAI models on data that it’s accumulated over decades. “We are using this to train our models to understand the telecom taxonomy,” said Goonetilleke. “When you ask it a question, contextually it’s aware of the environment and what it’s working with.”

Amdocs has been experimenting with its smart agent for customer care with several telco customers.

When a customer calls with a question about their bill, it typically takes about 15 minutes for the customer service rep to review their bill history and come up with an answer. Using the Amaiz platform, that time can be cut down to 30 seconds.

Goonetilleke said this can save telcos an enormous amount of money. When a customer service rep answers a call, it immediately costs the telco about $8.50. If the rep has to read through a customer’s billing history, the costs can really add up. He said Amaiz can cut costs by 30% to 40%.
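The figures quoted above can be put side by side with a few lines of Python. The call times and the 30% to 40% range come from the article; the baseline spend passed to `savings_range` is a hypothetical number chosen only to show the arithmetic, and the function name is likewise an assumption.

```python
# Handling times for a billing question, as quoted in the article.
before_s = 15 * 60  # about 15 minutes
after_s = 30        # about 30 seconds

speedup = before_s / after_s                     # how many times faster
time_saved_pct = 100 * (1 - after_s / before_s)  # share of handling time removed


def savings_range(baseline_cost: float, low: float = 0.30, high: float = 0.40):
    """Apply the quoted 30% to 40% savings range to a baseline cost."""
    return baseline_cost * low, baseline_cost * high


# Hypothetical baseline: $1,000,000 in customer care costs.
low_saving, high_saving = savings_range(1_000_000.0)
```

Note that the drop in handling time (about 30 times faster) is much larger than the quoted cost savings, since each call carries costs that do not shrink with its length.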

“We are up to about 93% in terms of accuracy now,” he said. “You can get a private version of ChatGPT and start training it and giving it real time information and get to the 60 percents. If you use the Amaiz platform you get to 93%.”

Amdocs expects telco customers to start using a commercialized version of Amaiz within the next 12-18 months.

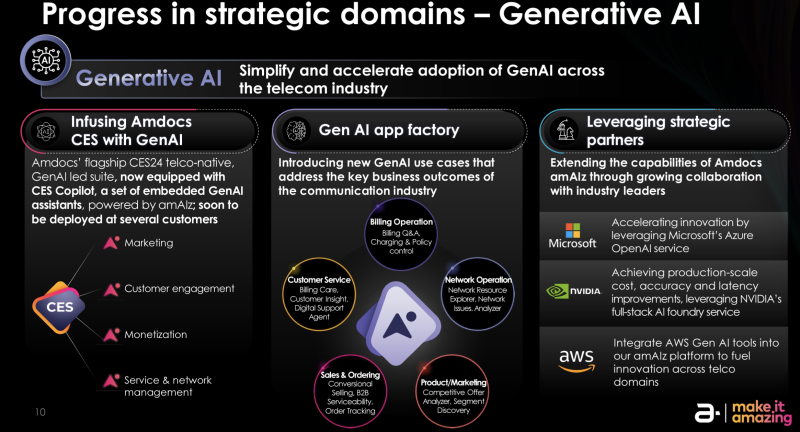

The company reported its fiscal Q2 2024 earnings this afternoon. And it published the below slide related to its AI status, as part of its earnings presentation.